Unmasking The Phenomenon Of Mrdeepfake The Digital Doppelgänger

Deepfakes have shaken digital trust, but none have captured public fascination quite like Mrdeepfake — the digital doppelgänger that blurs the line between human and artificial identity. Once a speculative threat confined to tech labs, Mrdeepfake now stands at the forefront of a cultural and ethical reckoning, exposing vulnerabilities in digital authenticity while redefining how we perceive identity online. This article lifts the veil on the phenomenon of Mrdeepfake, tracing its evolution, technical depth, and far-reaching implications.

At its core, Mrdeepfake is not merely a tool for creating convincing fake videos. It is a sophisticated AI-driven platform engineered to replicate facial expressions, voice nuances, and behavioral patterns with uncanny precision. Unlike early deepfakes reliant on basic morphing algorithms, Mrdeepfake leverages deep learning models trained on petabytes of biometric data, enabling real-time generation of hyper-realistic synthetic personas. As Dr. Elena Marquez, a computational linguist specializing in digital forensics, observes: “Mrdeepfake doesn’t just mimic faces; it performs a full digital persona, including gait, micro-expressions, and speech rhythm, making detection exponentially harder.” This level of fidelity positions Mrdeepfake as both a barrier and a mirror: a warning of technological capability and a catalyst for digital defense innovation.

Origins and Evolution: From Lab Curiosity to Global Concern

The genesis of Mrdeepfake traces back to breakthroughs in generative adversarial networks (GANs) and neural rendering, emerging from academic and hacker communities in the mid-2010s. Initially dismissed as experimental artifacts, early Mrdeepfake prototypes demonstrated startling accuracy, puppeteering public figures with movements indistinguishable from real footage. Early adopters celebrated the creativity; by 2023, the phenomenon had exploded into mainstream discourse as accessible platforms enabled widespread replication.

What began as limited demonstrations quickly morphed into real-world applications—and risks. The technology first surfaced in entertainment, with fans creating synthetic performances of legendary actors; however, malicious use soon followed.

By 2024, defense agencies and cybersecurity experts identified clear patterns: deepfakes were being weaponized in disinformation campaigns, corporate fraud, and impersonation-based cyberattacks. According to a 2024 report by the Global Cybersecurity Alliance, Mrdeepfake’s compute efficiency and user-friendly interfaces accelerated its integration into dark market economies, where synthetic identities now fuel identity theft and financial deception at scale.

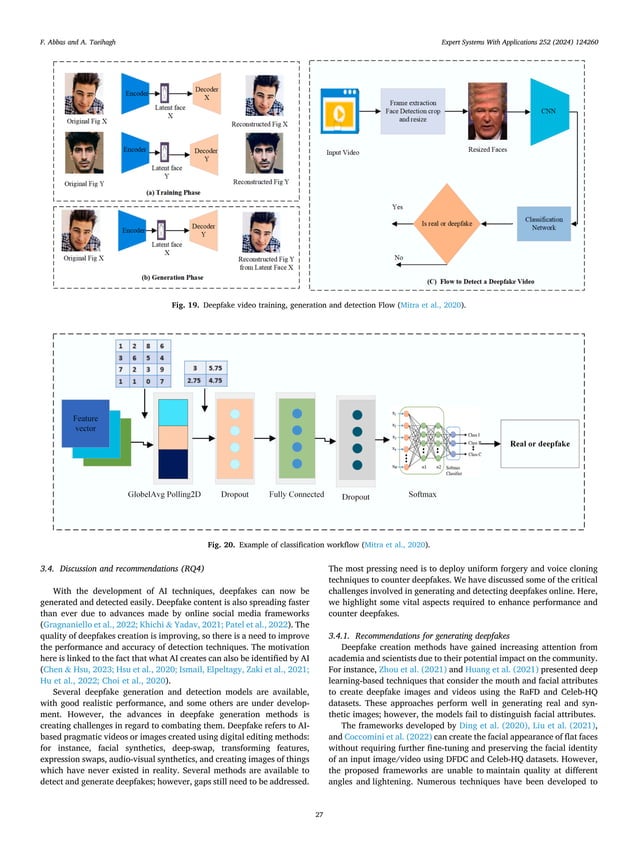

How Mrdeepfake Works: Precision Behind the Illusion

Mrdeepfake’s power stems from a multi-layered architecture grounded in deep learning. The system divides its process into four critical stages:

- Data Acquisition: Massive datasets, including high-resolution video, audio samples, and facial motion capture, are gathered from public sources, social media, and affiliate contributors. This raw material encodes physiological and behavioral traits unique to each target.

- Model Training: Generative models, typically large-scale GANs or diffusion-based architectures, undergo weeks of training on this curated data. The network learns to map low-level biometrics (such as blink timing, smile curvature, and head rotation) into a synthetic identity’s behavioral lexicon.

- Real-Time Synthesis: Once deployed, Mrdeepfake generates dynamic content in real time. Using lightweight inference engines, it adapts expressions mid-animation, ensuring consistency even under variable lighting or speech input.

- Refinement and Anonymization: Post-generation filters reduce digital artifacts and embed subtle watermarks for traceability, though top-tier outputs now evade such markers entirely.
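To make the idea of a traceability watermark concrete, here is a minimal sketch of least-significant-bit (LSB) marking. The article does not say which watermarking scheme is involved, so the choice of LSB and the names `embed_watermark` and `extract_watermark` are assumptions made purely for illustration.

```python
from typing import List


def embed_watermark(pixels: List[int], bits: List[int]) -> List[int]:
    """Store one watermark bit in the lowest bit of each leading pixel value.

    Hypothetical helper: `pixels` stands in for 8-bit grayscale samples.
    """
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the pixel buffer")
    marked = list(pixels)  # copy so the caller's buffer is not modified
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | (bit & 1)
    return marked


def extract_watermark(pixels: List[int], length: int) -> List[int]:
    """Read back the lowest bit of the first `length` pixel values."""
    return [p & 1 for p in pixels[:length]]


if __name__ == "__main__":
    frame = [200, 13, 255, 0, 77, 128]
    mark = [1, 0, 1, 1]
    stamped = embed_watermark(frame, mark)
    print(extract_watermark(stamped, len(mark)))
```

Each marked value differs from the original by at most one intensity level, which is why such marks are hard to see. It is also why they are fragile: any re-encoding or filtering pass that touches the low bits erases them, consistent with the observation that some outputs carry no usable marker.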

“This isn’t scripted mimicry; it’s performative synthesis,” explains Dr. Raj Puri, a lead AI researcher at the Cybernetic Ethics Institute. “The system doesn’t just ‘copy’ a person; it reconstructs the entire digital persona, from posture to emotional cadence.” The result is a force multiplier: while originally a tool for entertainment, Mrdeepfake now enables harassment, fraud, and psychological manipulation at unprecedented scale.

The Societal Impact: Eroding Trust in the Digital Age

The rise of Mrdeepfake has transformed digital deception from a technical concern into a societal crisis. Public trust in visual and audio evidence, once a cornerstone of journalistic integrity and legal proof, now teeters on fragile ground. A 2024 study by the Ponemon Institute revealed that 63% of professionals cannot confidently distinguish real from deepfake content, triggering paranoia in communications, politics, and finance. Media outlets report escalating incidents: falsified speeches, doctored news clips, and impersonated CEOs siphoning funds through synthetic voice commands. Governments are scrambling to respond; the EU’s AI Act, for example, requires that AI-generated content be marked as such and that deepfakes be disclosed. Meanwhile, advocacy groups warn of deeper damage. “Mrdeepfake doesn’t just create fictions; it fractures the foundation of collective reality,” asserts Sarah Chen, director of the Digital Trust Initiative.

Countermeasures and the Race for Deepfake Defense

In response, cybersecurity firms and academic institutions have accelerated development of detection tools designed to outpace Mrdeepfake’s precision. Techniques include:

- Biometric Anomaly Detection: Algorithms analyze subtle inconsistencies, such as unnatural eye blinking patterns, facial muscle asymmetry, or speech rhythm irregularities, to flag synthetic media.

- Blockchain-Based Authentication: Secure timestamping via distributed ledgers now anchors verified content, allowing users to confirm origin authenticity.

- Extended Awareness Campaigns: Media literacy programs teach audiences to scrutinize visual and auditory cues, promoting critical consumption in an era of synthetic persuasion.
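The ledger-based timestamping listed among these countermeasures can be illustrated with a small hash chain. This is a single-process sketch under stated assumptions, not a distributed ledger: the record layout and the names `append_record`, `ledger_is_intact`, and `is_registered` are hypothetical, and only Python's standard `hashlib` and `json` modules are used.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # assumed placeholder for "no previous record"


def content_digest(data: bytes) -> str:
    """SHA-256 fingerprint of a media file's bytes."""
    return hashlib.sha256(data).hexdigest()


def _hash_body(body: Dict[str, object]) -> str:
    # Sorted keys give a stable serialization, so the hash is reproducible.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(ledger: List[Dict[str, object]], data: bytes, timestamp: float) -> Dict[str, object]:
    """Append a timestamped fingerprint that is chained to the previous record."""
    prev_hash = ledger[-1]["record_hash"] if ledger else GENESIS_HASH
    body = {
        "content_hash": content_digest(data),
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    record = {**body, "record_hash": _hash_body(body)}
    ledger.append(record)
    return record


def ledger_is_intact(ledger: List[Dict[str, object]]) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = GENESIS_HASH
    for record in ledger:
        body = {k: record[k] for k in ("content_hash", "timestamp", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["record_hash"] != _hash_body(body):
            return False
        prev_hash = record["record_hash"]
    return True


def is_registered(ledger: List[Dict[str, object]], data: bytes) -> bool:
    """True if these exact bytes were fingerprinted in the ledger."""
    digest = content_digest(data)
    return any(record["content_hash"] == digest for record in ledger)
```

A publisher would call `append_record` when releasing a clip, and a viewer would later call `is_registered` on the bytes they received. A match shows only that the file is byte-identical to something registered at that time. It says nothing about whether the registered content was genuine in the first place.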

Tech giants like Meta and TikTok have rolled out AI-powered detection layers embedded directly into platforms, flagging high-risk content before amplification. Yet experts warn: “We are locked in a perpetual innovation race—each defensive advance invites a new evasion tactic,” notes Asha Mehta, chief AI ethics officer at Veritas Tech.
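As a rough illustration of how a detection layer might act on the blink-pattern cue mentioned among the countermeasures, the sketch below flags clips whose blink rate or blink timing looks implausible. It assumes blink timestamps have already been extracted by some upstream face tracker (not shown), and every threshold is an illustrative guess rather than a validated value.

```python
from statistics import mean, pstdev
from typing import List

# Illustrative thresholds only; a real detector would calibrate these on data.
MIN_BLINKS_PER_MIN = 4.0
MAX_BLINKS_PER_MIN = 40.0
MIN_INTERVAL_CV = 0.15  # lowest accepted coefficient of variation of blink gaps


def blink_anomalies(blink_times: List[float], duration_s: float) -> List[str]:
    """Return the reasons a clip's blinking looks unnatural (empty if none).

    `blink_times` are blink timestamps in seconds, sorted ascending.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")

    reasons: List[str] = []

    # Check 1: overall blink rate, in blinks per minute.
    rate = len(blink_times) / duration_s * 60.0
    if rate < MIN_BLINKS_PER_MIN:
        reasons.append("blink rate too low")
    elif rate > MAX_BLINKS_PER_MIN:
        reasons.append("blink rate too high")

    # Check 2: regularity of the gaps between blinks. Natural blinking is
    # uneven, so near-identical gaps are treated as suspicious.
    intervals = [b - a for a, b in zip(blink_times, blink_times[1:])]
    if len(intervals) >= 3:
        avg = mean(intervals)
        cv = pstdev(intervals) / avg if avg > 0 else 0.0
        if cv < MIN_INTERVAL_CV:
            reasons.append("blink timing too regular")

    return reasons


if __name__ == "__main__":
    print(blink_anomalies([3.0, 6.0, 9.0, 12.0, 15.0, 18.0], 20.0))
```

A single cue like this is easy to defeat once it is known, which is the innovation race the experts describe. In practice it would be one signal among many rather than a verdict on its own.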

Looking Ahead: The Unmasking of Truth in a Synthetic World

Mrdeepfake stands as both a technological marvel and a profound ethical challenge, exposing the fragility of digital identity in an age of synthetic mastery. As AI tools grow more accessible and powerful, the line between human and machine-generated persona continues to blur, demanding not only technical vigilance but cultural adaptation. The emergence of Mrdeepfake forces a pivotal reckoning: can society preserve authenticity without sacrificing the creativity and connective potential of digital innovation?

As Dr. Elena Marquez concludes, “We must evolve beyond simple detection. The future of trust lies in embedding verification deeply into communication systems, while re-educating vigilance at every level—from users to governments.” In mastering this digital double, humanity confronts not just a technology, but the very meaning of truth itself.
