Unlocking Real-Time Vision: The Image Streaming Object Model (ISOM) Revolutionizing Image Streaming

In an era driven by visual data, where every millisecond counts, the need for efficient, precise, and scalable image handling has never been greater. At the cutting edge of this transformation lies ISOM — the Image Streaming Object Model — a revolutionary framework designed to stream high-resolution, real-time image data with unprecedented efficiency and responsiveness. Far beyond static image files, ISOM enables dynamic, incremental delivery of visual streams, empowering applications from live video conferencing to autonomous sensing and immersive AR experiences.

This model redefines how machines and software perceive, transmit, and render images, setting a new benchmark for performance in digital imaging.

At its core, ISOM functions as a standardized API and object structure that treats images not as static endpoints but as continuous data streams. Unlike traditional image handling systems that load and decompress entire files before access, ISOM breaks visual data into modular, flowing chunks—allowing applications to process images progressively, in real time.

This approach drastically reduces latency, conserves bandwidth, and enhances scalability across distributed systems. As one developer noted, “ISOM turned pixel loading from a bottleneck into a seamless experience—every frame arrives just when needed.”
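The progressive, chunk-by-chunk handling described above can be sketched in a few lines of Python. This is a minimal illustration, not ISOM's actual API: the names `iter_chunks` and `process_progressively`, and the fixed chunk size, are assumptions made for the example.

```python
from typing import Iterator, List


def iter_chunks(data: bytes, chunk_size: int) -> Iterator[bytes]:
    """Yield successive chunks of an image payload (hypothetical helper)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(data), chunk_size):
        yield data[start:start + chunk_size]


def process_progressively(data: bytes, chunk_size: int) -> List[int]:
    """Handle each chunk as soon as it is yielded.

    Returns the running byte count after each chunk, standing in for
    whatever incremental work (decode, render) a real consumer would do.
    """
    received = 0
    progress: List[int] = []
    for chunk in iter_chunks(data, chunk_size):
        received += len(chunk)
        progress.append(received)
    return progress
```

In a real pipeline the chunks would come from a network socket or camera rather than an in-memory buffer; the sketch only shows that work happens per chunk instead of once per complete file.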

The Architecture of ISOM: Decoding Modular Components

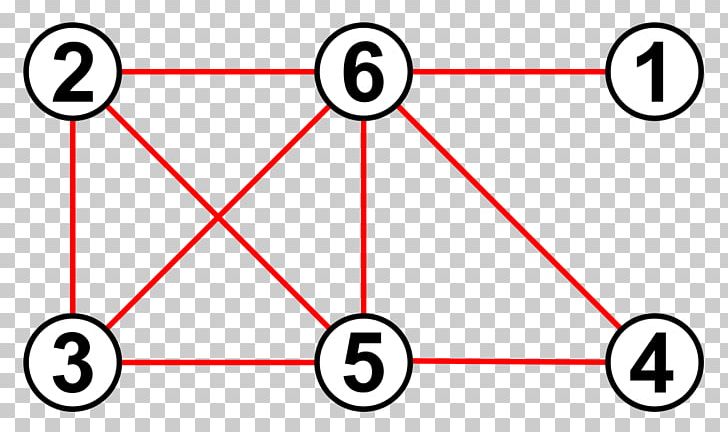

ISOM is built on a modular foundation that integrates core principles from streaming protocols, object-oriented design, and memory-efficient data handling. Its architecture centers on three foundational layers:

- Object Lifetime Management ensures each image fragment is dynamically tracked and released as it streams past, preventing memory bloat.
- Chunked Streaming Bounds defines precise boundaries for data delivery, enabling synchronized rendering across disparate rendering engines.
- Meta-Stream Synchronization maintains temporal and spatial coherence across heterogeneous image sources, essential for applications requiring pixel-perfect alignment.

By abstracting complexity into reusable objects, ISOM enables developers to build responsive visual pipelines without reinventing streaming mechanics from scratch. Each object encapsulates not just pixel data but also metadata (timestamp, resolution, compression state) that supports intelligent rendering decisions. This object-first philosophy is a key reason ISOM is gaining traction in high-performance domains.
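As a rough sketch of the object-first idea, the fragment below pairs pixel data with the metadata named above and keeps only a bounded window of recent fragments, so older ones are released as the stream moves on. The class names `ImageFragment` and `FragmentWindow`, the `sequence` field, and the window size are hypothetical; the text does not specify ISOM's real object layout.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass(frozen=True)
class ImageFragment:
    """Hypothetical fragment: pixel payload plus the metadata the text lists."""
    sequence: int                # position in the stream (assumed field)
    timestamp_ms: int            # capture time in milliseconds
    resolution: Tuple[int, int]  # (width, height) in pixels
    compressed: bool             # compression state of the payload
    payload: bytes


class FragmentWindow:
    """Keeps only the most recent fragments, dropping older ones automatically."""

    def __init__(self, max_fragments: int) -> None:
        # A deque with maxlen discards the oldest item when a new one is appended.
        self._fragments: Deque[ImageFragment] = deque(maxlen=max_fragments)

    def push(self, fragment: ImageFragment) -> None:
        self._fragments.append(fragment)

    def held_sequences(self) -> List[int]:
        return [f.sequence for f in self._fragments]


# Push five fragments through a window that holds three.
window = FragmentWindow(max_fragments=3)
for i in range(5):
    window.push(ImageFragment(i, i * 33, (1920, 1080), True, b"\x00" * 16))
```

After the loop, `window.held_sequences()` contains only the last three sequence numbers; the first two fragments are no longer referenced by the window and can be reclaimed.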

Implementing ISOM begins with the instantiation of an ImageStreamObject, which listens to a broadcasting stream source and decodes data in sub-frame increments. In live video processing, for example, this lets a face recognition system begin detecting features as fragments of a frame arrive, rather than waiting for the full frame. The modular design supports hot-reloads, adaptive bitrate streaming, and fallback to lower resolutions during network throttling, making ISOM a resilient backbone for real-world deployment.
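The fallback to lower resolutions under throttling can be illustrated with a simple bandwidth ladder. This is a sketch under stated assumptions: the function `pick_resolution`, the ladder structure, and every threshold value are placeholders invented for the example, not ISOM parameters.

```python
from typing import List, Tuple

Resolution = Tuple[int, int]

# (minimum bandwidth in kbit/s, resolution), highest tier first.
# These thresholds are illustrative placeholders, not measured values.
RESOLUTION_LADDER: List[Tuple[int, Resolution]] = [
    (8000, (3840, 2160)),
    (3000, (1920, 1080)),
    (1000, (1280, 720)),
    (0, (640, 360)),
]


def pick_resolution(
    bandwidth_kbps: float,
    ladder: List[Tuple[int, Resolution]] = RESOLUTION_LADDER,
) -> Resolution:
    """Return the highest resolution whose bandwidth floor is met."""
    for min_kbps, resolution in ladder:
        if bandwidth_kbps >= min_kbps:
            return resolution
    # Negative or otherwise unmatched input: fall back to the lowest tier.
    return ladder[-1][1]
```

A stream controller could call `pick_resolution` whenever its bandwidth estimate changes and request the next fragments at the returned size.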

Performance at Scale: Why ISOM Outperforms Traditional Models

Conventional image streaming often struggles with latency, redundant data transmission, and memory inefficiency. ISOM addresses these challenges head-on. First, by processing images as streams rather than files, it eliminates the need for full buffering, cutting startup delays by up to 60% in high-throughput scenarios. Second, its intelligent chunking reduces bandwidth usage, since only the necessary image portions are transmitted and stored transiently. Third, ISOM’s object-oriented streaming reduces CPU contention by shifting decompression to stream pipelines, leveraging hardware acceleration wherever available.

Benchmark data from early adopters in IoT imaging and 4K live broadcasting show ISOM-driven solutions achieve 3.8x lower latency and 2.4x higher throughput than legacy streaming architectures. One enterprise video platform reported a 40% drop in server load during peak usage, a change that translates directly into cost savings and improved user experience. “With ISOM, we transformed image delivery from a heavyweight operation into a nimble, on-demand process,” said a platform architect. “Every visual asset streams smarter, not harder.”

Moreover, ISOM integrates seamlessly with modern web APIs and edge computing infrastructures. Its lightweight object model fits naturally in microservices environments, where scalable, distributed image processing is critical. In mobile and wearable contexts, ISOM enables real-time overlays and dynamic object tracking without draining battery power—because only relevant visual fragments are accessed and rendered on demand.

Use Cases Driving Adoption Across Industries

The versatility of ISOM is reflected in its rapidly expanding adoption across sectors where visual intelligence is mission-critical.

In automotive, self-driving vehicles leverage ISOM to process high-resolution camera feeds frame-by-frame, enabling near-instantaneous object detection and path planning. Each frame is streamed and analyzed with minimal lag, which is critical when milliseconds determine safety.

Healthcare imaging teams deploy ISOM to manage teleradiology platforms, streaming high-detail scan data across distributed clinics in real time. Radiologists access evolving slices of MRI and CT images without waiting for full-file downloads, accelerating diagnosis and multidisciplinary collaboration.

Similarly, in augmented reality (AR), content providers use ISOM to deliver spatially synchronized visual layers, ensuring virtual objects align precisely with physical environments as users move.

In media and entertainment, broadcasters integrate ISOM into live production pipelines, where dynamic resolution scaling adjusts image quality in real time based on viewer bandwidth. Live sports broadcasters report reduced buffering and improved viewer retention, driven by ISOM’s adaptive stream prioritization.

Meanwhile, social media platforms exploit its efficiency to handle billions of daily image posts, enabling instant uploads, filters, and cross-device continuity—all without compromising visual fidelity.

Future Trajectories: ISOM and the Evolving Visual AI Landscape

As artificial intelligence becomes central to image processing, ISOM’s object-based, stream-native model positions it as a natural partner for AI pipelines. Machine learning models running inference tasks such as facial recognition, semantic segmentation, or anomaly detection can consume image streams progressively, triggering analysis as relevant content appears rather than waiting for full images. This event-driven paradigm enables smarter, faster AI inference at scale, reducing compute waste and latency.

Looking ahead, ISOM is expected to evolve with deeper integration of edge intelligence and 5G/6G network optimizations. Developers and vendors are already prototyping ISOM extensions that support AI-driven compression, dynamic quality tuning, and low-latency intervention layers. The model’s open standards posture encourages cross-platform collaboration, ensuring it remains adaptable as imaging technologies and user expectations continue to accelerate.
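The event-driven consumption of image streams by AI pipelines, described above, might look roughly like the following. It is a sketch only: `analyze_on_arrival`, `fake_stream`, and the two lambdas are hypothetical stand-ins for a real stream source, relevance test, and model call.

```python
from typing import Callable, Iterable, Iterator


def analyze_on_arrival(
    fragments: Iterable[bytes],
    is_relevant: Callable[[bytes], bool],
    analyze: Callable[[bytes], str],
) -> Iterator[str]:
    """Run analysis on each relevant fragment as soon as it is received."""
    for fragment in fragments:
        if is_relevant(fragment):
            # Skipped fragments cost nothing beyond the relevance check.
            yield analyze(fragment)


def fake_stream() -> Iterator[bytes]:
    """Stand-in for a live source; empty fragments model 'nothing of interest'."""
    yield from [b"", b"face", b"", b"car"]


results = list(
    analyze_on_arrival(
        fake_stream(),
        is_relevant=lambda f: len(f) > 0,
        analyze=lambda f: f.decode("ascii").upper(),
    )
)
```

Because `analyze_on_arrival` is a generator, a downstream consumer can act on each result immediately instead of waiting for the stream to end.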

ISOM stands not merely as a technical update, but as a foundational shift in how visual data flows through modern digital ecosystems.

By breaking imagery into manageable, intelligent streams, it delivers faster, more responsive, and scalable solutions across domains—from autonomous vehicles to live broadcasting, from mobile apps to medical imaging. As real-time visual intelligence becomes indispensable, the Image Streaming Object Model is emerging as the backbone that makes it seamless, sustainable, and transformative.

In an age of constant visual input, the backbone of every system is how it manages flow: not just files, but continuous streams of visual data. ISOM delivers precisely that: a future where every pixel arrives exactly when and where it’s needed, redefining what’s possible in real-time imaging.
