L2 Norm: The Hidden Engine Powering Modern AI and Scientific Progress

In an era defined by rapid technological evolution, the L2 norm, also known as the Euclidean norm, has become a quiet but indispensable tool across artificial intelligence, data science, and engineering. This mathematical measure of vector length provides a standard way to quantify magnitude, enabling precision in systems that learn, classify, and predict. Far more than a simple mathematical construct, the L2 norm underpins many of the algorithms driving innovation, from optimizing neural networks to making real-world data pipelines more robust.

At its core, the L2 norm is the square root of the sum of the squared components of a vector: ||x||₂ = √(Σ x_i²). It reduces a vector to a single nonnegative scalar that reflects its size. The principle is simple, yet it scales well. In machine learning, where vectors represent features, model parameters, or gradients, the L2 norm is used to keep training stable, to detect and limit numerical blow-ups, and to support efficient optimization.
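As a minimal sketch of that definition, the following uses only the Python standard library. The function name `l2_norm` is chosen here for illustration and does not come from any particular library.

```python
import math

def l2_norm(v):
    """Return the Euclidean (L2) norm: sqrt of the sum of squared components."""
    return math.sqrt(sum(x * x for x in v))

vec = [3.0, 4.0]
print(l2_norm(vec))  # 5.0, the classic 3-4-5 right triangle
```

For very large or very small components, a production implementation would usually rescale before squaring to avoid overflow or underflow; this sketch skips that for clarity.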

According to Dr. Elena Marquez, a computational scientist at MIT, “The L2 norm acts as a regularizer by constraining parameter growth, which curbs overfitting and fosters generalization, essential traits in fast-moving AI development.” By penalizing large weights during training, L2-regularized algorithms often converge more reliably and hold up better under noisy or scarce data conditions.

Applications Across Artificial Intelligence and Deep Learning

Normalization in Neural Network Training

One of the most widespread uses of the L2 norm is in weight regularization. Deep learning models rely on gradient descent, a process sensitive to exploding or vanishing gradients and to unchecked weight growth. L2 regularization, often called weight decay, adds a term proportional to the squared L2 norm of the weights to the loss function, penalizing excessive weight magnitudes. This encourages the model to keep weights small, producing smoother decision boundaries and reducing sensitivity to input variations.
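To make the penalty concrete, here is a small sketch of one gradient step on a loss of the form data_loss + lam * ||w||₂². The names `l2_penalty`, `sgd_step`, `lr`, and `lam` are illustrative assumptions, not taken from any framework.

```python
def l2_penalty(weights, lam):
    """Penalty term lam * sum(w_i^2) that is added to the data loss."""
    return lam * sum(w * w for w in weights)

def sgd_step(weights, grads, lr, lam):
    """One gradient-descent step on data_loss + lam * ||w||^2.

    `grads` is the gradient of the data loss alone; the penalty
    contributes an extra 2 * lam * w_i to each component.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]

weights = [1.0, -2.0]
zero_grads = [0.0, 0.0]  # isolate the effect of the penalty
shrunk = sgd_step(weights, zero_grads, lr=0.1, lam=0.5)
print(shrunk)  # each weight is pulled toward zero by a factor of 1 - 2*lr*lam
```

With a zero data gradient, every weight shrinks by the same factor, which is why the technique is commonly described as weight decay under plain gradient descent.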

As Will Poole, a machine learning engineer at a leading AI lab, notes, “L2 regularization isn’t just a technical fix; it embodies a design philosophy—precision through restraint.” In convolutional neural networks (CNNs), this results in faster convergence and models that generalize better across diverse training sets.

Support Vector Machines and Distance-Based Discrimination

In support vector machines (SVMs), the L2 norm defines the margin, the buffer zone between classes in high-dimensional space. For a separating hyperplane with weight vector w, the margin width is 2/||w||₂, so maximizing the margin amounts to minimizing ||w||₂; the support vectors are the training points that lie on the margin. The squared Euclidean distance, ||x – y||₂² = Σ(x_i – y_i)², forms the geometric backbone of this optimal boundary construction. “Without the L2 norm, SVMs lose their geometric intuition and predictive power,” explains Dr. Rajiv Mehta, a statistics professor at Stanford. “It’s what makes SVMs effective in high-dimensional feature spaces, from text classification to bioinformatics.”

This principle extends beyond classification: L2-based distance metrics are vital in clustering, anomaly detection, and recommendation systems.
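The geometry of the margin can be sketched in a few lines. The example assumes a linear SVM with a hyperplane written as w·x + b = 0 and margin boundaries at w·x + b = ±1; the values of `w` and `b` are made up for illustration rather than fitted to data.

```python
import math

def l2_norm(v):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in v))

def distance_to_hyperplane(x, w, b):
    """Euclidean distance from point x to the hyperplane w.x + b = 0."""
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b) / l2_norm(w)

def margin_width(w):
    """Distance between the boundaries w.x + b = +1 and w.x + b = -1."""
    return 2.0 / l2_norm(w)

w, b = [3.0, 4.0], -5.0  # hypothetical hyperplane, not a trained model
print(margin_width(w))                           # 0.4
print(distance_to_hyperplane([3.0, 4.0], w, b))  # 4.0
```

Because the margin is 2/||w||₂, shrinking the norm of w widens the margin, which is the link between the L2 norm and the SVM training objective.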

Data Preprocessing and Robustness in Machine Learning Pipelines

Feature Scaling and Condition Number Mitigation

Before training begins, raw data is typically mean-centered, scaled, and normalized. The L2 norm plays a subtle but critical role here, particularly in stabilizing optimization. When feature vectors have vastly different magnitudes, gradient descent can stall or oscillate inefficiently. By normalizing inputs via L2 scaling, or by using techniques such as layer normalization (which rescales activations by their standard deviation, itself an L2-type statistic), models train more efficiently and avoid ill-conditioned problems. The condition number of the design matrix, a measure of numerical sensitivity, often improves under such scaling, which supports stable updates and faster convergence.
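One simple form of L2 scaling divides each feature vector by its own norm so that every sample has unit length. The sketch below assumes samples are stored as plain lists; `l2_normalize` and the `eps` guard are illustrative choices.

```python
import math

def l2_normalize(v, eps=1e-12):
    """Scale v to unit L2 norm; near-zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm < eps:
        return list(v)
    return [x / norm for x in v]

# Two samples pointing the same way but with very different magnitudes.
rows = [[3.0, 4.0], [300.0, 400.0]]
unit_rows = [l2_normalize(r) for r in rows]
print(unit_rows)  # [[0.6, 0.8], [0.6, 0.8]]
```

After scaling, both samples are identical, so the optimizer sees direction rather than raw magnitude. Whether per-sample or per-feature scaling is appropriate depends on the data and the model.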

“A well-scaled input space, grounded in Euclidean principles, transforms training from a gamble into a controlled calculation,” says Dr. Mei Lin, senior data scientist at a global fintech firm.

Outlier Detection and Robustness Filters

The L2 norm’s sensitivity to deviations makes it a natural tool for detecting anomalies. In many applications, outliers manifest as data points far from a cluster’s center, with “far” measured by the squared distance from the mean. Because the mean itself is pulled toward extreme points, the L2 framework is often combined with robust statistics, such as trimmed means or median-based scaling, to identify unusual observations reliably. “Because the L2 norm penalizes large deviations squared, it excels at highlighting rare events while being math-efficient,” notes Dr. Kai Fang, researcher at a leading computer vision lab. This property informs applications from fraud detection in financial transactions to system monitoring in industrial IoT devices, where reliable outlier filtering prevents costly errors.
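A rough sketch of this idea follows: it measures each point's L2 distance from a per-coordinate median center and flags points whose distance exceeds the median distance plus `k` times the median absolute deviation. The function names, the choice of a median center, and the default `k=3.0` are assumptions made for illustration, not a standard prescribed by the article.

```python
import math
import statistics

def l2_distance(a, b):
    """Euclidean distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def flag_outliers(points, k=3.0):
    """Return one boolean per point: True if it lies unusually far from the center."""
    dims = len(points[0])
    # Per-coordinate median is less affected by extreme points than the mean.
    center = [statistics.median(p[d] for p in points) for d in range(dims)]
    dists = [l2_distance(p, center) for p in points]
    med = statistics.median(dists)
    mad = statistics.median(abs(d - med) for d in dists)
    threshold = med + k * mad
    return [d > threshold for d in dists]

points = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [10.0, 10.0]]
print(flag_outliers(points))  # [False, False, False, False, True]
```

If the deviation estimate is zero (for example, when most points coincide), the threshold collapses to the median distance, so real use would need a floor on the threshold.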

Scientific Computing and Engineering Simulations

Stabilizing Numerical Simulations in Engineering

Beyond AI, the L2 norm proves indispensable in computational engineering. In structural analysis, fluid dynamics, and electromagnetic modeling, systems of equations often yield high-dimensional solutions vulnerable to floating-point instability. The L2 norm of the residual is a standard way to monitor convergence in iterative solvers. Engineers also use it to check energy conservation and to bound residual errors.

For example, in finite element modeling, minimizing the L2 residual ||F – G||₂ across discretized domains enhances accuracy and reduces simulation artifacts. “The L2 norm isn’t just a tool; it’s a safeguard,” says Dr. Lena Petrova, a computational physicist. “It bridges abstract models with measurable reality, ensuring predictions are both powerful and trustworthy.”
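Residual-based convergence monitoring can be illustrated with a Jacobi iteration on a small linear system A x = b, stopping once ||b – A x||₂ falls below a tolerance. Jacobi is used here only as a simple stand-in for the iterative solvers found in engineering codes; the matrix, right-hand side, and tolerance are made-up values.

```python
import math

def residual_norm(A, x, b):
    """L2 norm of the residual b - A x."""
    r = [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(A, b)]
    return math.sqrt(sum(ri * ri for ri in r))

def jacobi(A, b, tol=1e-10, max_iter=500):
    """Jacobi iteration; returns (x, iterations used).

    Stops when the residual norm is at most tol. If max_iter is reached,
    the last iterate is returned without a convergence guarantee.
    """
    n = len(b)
    x = [0.0] * n
    for it in range(max_iter):
        if residual_norm(A, x, b) <= tol:
            return x, it
        x = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
    return x, max_iter

A = [[4.0, 1.0], [2.0, 5.0]]  # diagonally dominant, so Jacobi converges
b = [6.0, 12.0]               # chosen so the exact solution is [1, 2]
x, iters = jacobi(A, b)
print(x, iters, residual_norm(A, x, b))
```

The residual norm gives a single number to track per iteration, which is what makes it convenient as a stopping criterion.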

Control Systems and Feedback Loop Optimization

In control theory, the L2 norm quantifies error and optimizes feedback controllers. By minimizing the squared error between desired and actual system states, controllers calibrated via L2-based cost functions achieve precise tracking and disturbance rejection. This approach, formalized in Linear Quadratic Regulator (LQR) design, uses a quadratic (squared L2) cost to balance tracking performance against control effort. “The elegance lies in its simplicity: minimizing squared error yields stable, responsive systems, critical in aerospace, robotics, and autonomous vehicles,” explains Dr. Arjun Mehta, control systems expert at an AV manufacturer. Here, the L2 norm transforms dynamic complexity into measurable stability.
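For intuition, the scalar discrete-time case can be worked through directly. The sketch assumes a system x[k+1] = a·x[k] + b·u[k] with cost Σ (q·x[k]² + r·u[k]²) and feedback u = –K·x, and finds the gain by iterating the scalar Riccati recursion. The numbers and the name `scalar_lqr_gain` are illustrative only.

```python
def scalar_lqr_gain(a, b, q, r, iters=1000):
    """Iterate the scalar discrete-time Riccati recursion and return (K, P).

    P approximates the cost-to-go coefficient (cost = P * x0^2),
    and K is the feedback gain in u = -K * x.
    """
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    k = a * b * p / (r + b * b * p)
    return k, p

a, b = 1.2, 1.0   # open-loop unstable because |a| > 1
q, r = 1.0, 1.0   # equal weight on state error and control effort
K, P = scalar_lqr_gain(a, b, q, r)
print(K, P, a - b * K)  # the closed-loop pole a - b*K lies inside the unit circle
```

Raising `r` relative to `q` penalizes control effort more heavily and yields a smaller gain, which is the performance-versus-energy trade-off described above.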

The ubiquity of the L2 norm across machine learning, scientific computing, and control reveals a broader truth: even foundational mathematics can drive cutting-edge innovation. Its mathematical clarity, coupled with robust practical utility, makes it not just a tool but a cornerstone of modern technology, enabling AI that learns wisely, systems that compute reliably, and science that measures precisely. As data grows in volume and complexity, the L2 norm remains a steadfast ally in transforming raw information into actionable insight.

In applied mathematics, the L2 norm is more than a formula: it is the silent architect of trust, precision, and performance across disciplines. From AI models to scientific simulations, its influence is omnipresent, quiet yet powerful.

Related Post

Investigating the Consequence of Deena Beasley’s Legacy in Contemporary Discourse

Examining the Intriguing World of Ben Attal

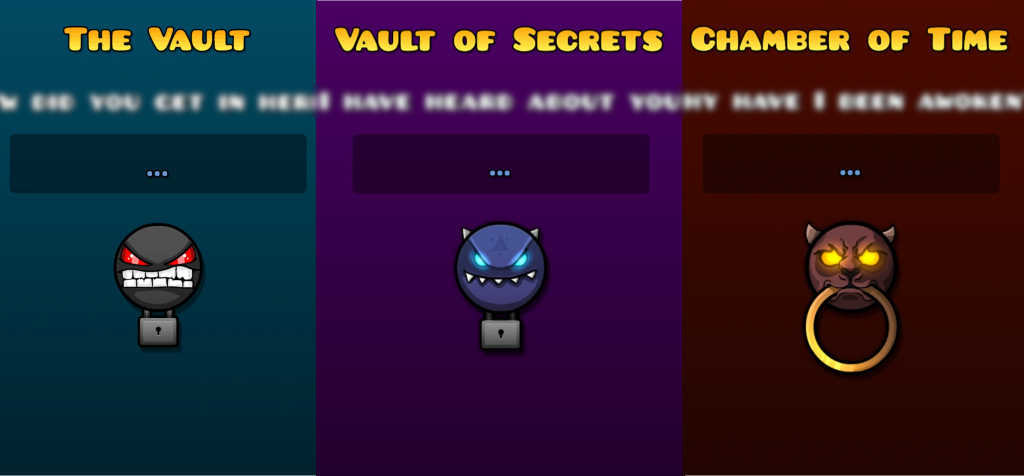

Unlock Geometry Dash Vault: All Working Codes – The Ultimate Code Library for Vault Attackers

Decoding the Power of Sustainable Urban Agriculture in Modern Cities